Cheap deep learning for photometric supernova classification and beyond

Student: Tarek Allam

Supervisory Team: Dr. Jason McEwen (MSSL, CDT Director of Research), Prof. Ofer Lahav (Physics, Director of CDT), Dr. Denise Gorse (Computing)

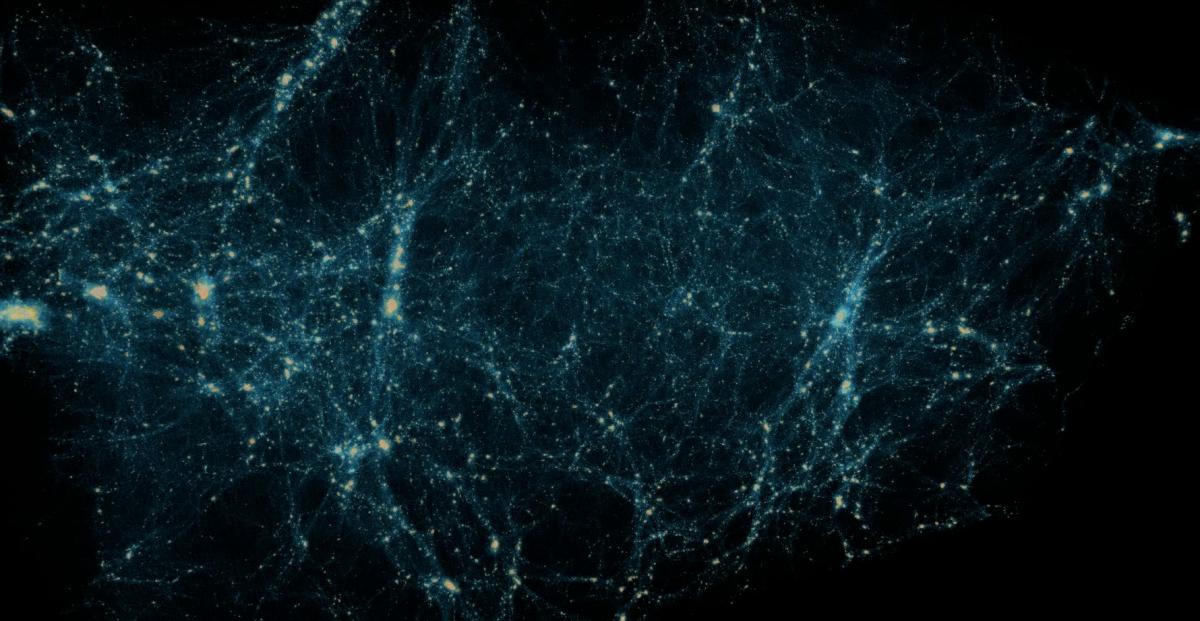

The Large Synoptic Survey Telescope (LSST) is predicted to observe millions of supernovae (SNe) over its lifetime, orders of magnitude beyond the number discovered by all previous astronomical surveys combined (of order a thousand SNe have been discovered to date). These SN observations will be used for a variety of cosmological studies, for example to examine the nature of dark energy. However, to be cosmologically useful, SN observations must be classified by the type of event (e.g., runaway thermonuclear fusion, core collapse). Historically, SN cosmology has required costly and time-consuming spectroscopic classification of every SN, which is simply impossible for the millions of events that LSST will discover. Instead, SN cosmology with LSST will require photometric techniques, in which classification is performed using only the flux levels observed in a small number of broad photometric filter bands. We will focus on cheap deep-learning techniques for photometric SN classification and, in particular, address the problem of small, non-representative training sets.